eBook

Governing Volume: Ensuring Trust and Quality in Big Data

Challenge: Ensuring High-Quality Data as Volume and Variety Grow

The term “Big Data” doesn’t seem quite big enough to describe the vast overabundance of data available to organizations today. As the volume and variety of big data sources continue to grow, the level of trust in that data remains troublingly low. Business leaders have repeatedly expressed little confidence in the reliability of the data they use to run their businesses. In KPMG’s 2017 CEO Study, nearly half of CEOs shared concern about the integrity of the data they base decisions on. Results from Precisely’s 2019 Enterprise Data Quality Survey suggest that the trend continues:

of respondents had untrustworthy or inaccurate insights from analytics due to lack of quality.

do not have a process for applying data quality to the data in the data lake or enterprise data hub.

The very purpose of the data lake is to enable new levels of business insight and clarity. No one sets out to create a data swamp that provides nothing but confusion and distrust. Read on to discover how a strong focus on data quality, spanning the people, processes and technology of your organization, will help ensure trust and quality in the analytics that drive business decisions.

People: Empowering the Business to Use Big Data Without Barriers

Organizations must recognize that data scientists and analysts are only one part of the equation for deriving value from big data. Sales, marketing, operations, and every other business function also need quick and efficient access to trusted data for the organization to be successful.

For decades, the IT department “owned” the company data and fulfilled all requests for data from the rest of the organization – a process that cannot keep up with massive demand for vital information in today’s big data world. Instead, many organizations are actively “democratizing” their big data.

For data democratization to be successful, organizations must ensure their data lake is filled with high-quality, well-governed data that is easy to find, easy to understand and easy to determine its quality and fitness for purpose.

Bernard Marr

Big Data analytics expert and author

Process: Making Big Data Fit For Purpose

Organizations must retain and cultivate the full context of their big data to allow users to solve business problems, ask new questions, test hypotheses, evaluate outcomes and generate new reports and analyses supported by sound, fit-for-purpose data. Doing so requires effective data quality practices, which enable access to the data lake and give people an easy way to understand the data and its purpose.

Key capabilities supporting Data Quality include: Data Discovery, Data Quality Processing, and Operational Integrations with key business applications.

Technology: Scale Data Quality Processes with Automation

Big data quality requires a data quality tool that can handle a growing volume of data. Trillium Quality offers industry-leading data profiling and data quality powered by the scalability and performance to deliver trusted business applications.

| Must-Know Data Questions | How Trillium Quality Provides Answers |
| --- | --- |
| What data do we have? Where is it? What relationships and/or dependencies exist between our various data entities? | Trillium Quality automatically profiles your selected data sources. |
| How can I determine the current level of our data quality? | Trillium Discovery Center enables end users and teams to rapidly access, understand and analyze data quality standards through a web browser user interface. No programming or IT assistance is required. |
| How can I identify and implement the data quality rules for monitoring improvement? | Using Trillium Discovery Center, users can also use REST APIs to support bi-directional integration with data governance tools. |

- Parse data values from unstructured fields into useful, usable new attributes

- Verify and enrich global postal addresses

- Standardize values for matching and linking

- Enrich data with external, third-party sources to create comprehensive, unified records

- Consolidate and aggregate related data into a single, “golden” record as appropriate, based on factors such as data source, date, etc.

- Link records spanning multiple sources of data related to the same customer, product or other data entity
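To make the standardize, link and consolidate steps above concrete, here is a minimal Python sketch. It is an illustration only and does not represent how Trillium Quality works internally: the field names, the matching rule (same standardized name and postal code) and the "newest non-empty value wins" survivorship rule are all assumptions chosen for the example.

```python
from collections import defaultdict
from datetime import date


def standardize(value):
    """Lowercase, trim and collapse whitespace so equivalent values compare equal."""
    return " ".join(value.lower().split())


def match_key(record):
    # Hypothetical matching rule: same standardized name and postal code.
    return (standardize(record["name"]), standardize(record["postal_code"]))


def link(records):
    """Group records from different sources that share a match key."""
    groups = defaultdict(list)
    for record in records:
        groups[match_key(record)].append(record)
    return list(groups.values())


def consolidate(group):
    """Build a 'golden' record: for each field, the newest non-empty value wins."""
    golden = {"sources": sorted({r["source"] for r in group})}
    # Oldest first, so values from newer records overwrite older ones.
    for record in sorted(group, key=lambda r: r["updated"]):
        for field in ("name", "postal_code", "email"):
            if record.get(field):
                golden[field] = record[field]
    return golden


# Illustrative sample data (invented for this sketch).
records = [
    {"source": "crm", "name": "Ann Lee", "postal_code": "02116",
     "email": "", "updated": date(2019, 1, 5)},
    {"source": "web", "name": "ann  lee ", "postal_code": "02116 ",
     "email": "ann@example.com", "updated": date(2018, 6, 1)},
    {"source": "erp", "name": "Bob Ray", "postal_code": "10001",
     "email": "bob@example.com", "updated": date(2019, 3, 9)},
]

golden_records = [consolidate(group) for group in link(records)]
```

In this sketch the two "Ann Lee" records are linked despite differences in case and spacing, and the golden record keeps the newer CRM name while filling the missing email from the older web record. Real-world matching typically needs fuzzier rules than an exact key comparison.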

Trillium Quality lets you run your data quality jobs anywhere, including natively within big data execution frameworks such as Hadoop MapReduce and Spark. No user coding skills are required.

This is made possible by Precisely Intelligent Execution technology, which dynamically optimizes data processing at run-time based on the chosen compute framework – with no changes or tuning required.

Big Data Quality in Action: Customer 360

Enterprise data quality is essential for implementing vital data lake use cases, such as creating a 360-degree view of the customer (or of other business entities, such as product, supplier or partner). Whether for customer engagement programs such as Next Best Action, customer churn analytics, fraud detection, compliance or other use cases, Trillium Quality helps ensure the highest accuracy in entity resolution.

Precisely Trillium’s data profiling, quality and enrichment capabilities combine to deliver a common, unified, trusted view of customer data across all systems and sources.

Fueling Enterprise Data Governance with Quality Data

We are operating in a world where our expectations about what data can do are growing while, at the same time, trust and confidence in data are declining.

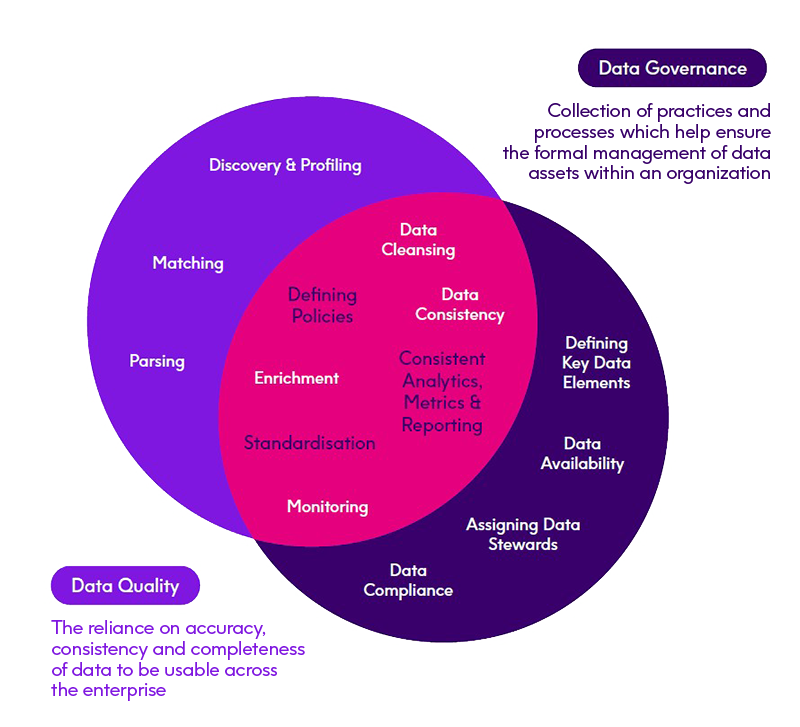

Organizations are implementing data governance best practices to help drive business advantage, improve customer experience, strengthen supplier relations and meet regulatory compliance requirements. But the effectiveness of these practices is tied to the quality of the data used.

Trillium Quality offers a REST API that enables tight integration with data governance systems. For example, data governance policy definitions can be linked to results of Trillium Quality’s business rules, for consistent tests that ensure those rules are being enforced in data management.

Learn how to strengthen your overall data governance framework through improved data quality in this related eBook.